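Recovering the Depth Value from a Linear01 Value

Environment

Unity 2022.3.1f1

Overview

This is the inverse of Linear01Depth(float z). The formula is taken as-is from the references listed at the end of this post.

I tested it in C#, and the round trip returned the same values.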

using UnityEngine;

public class TestDepthValue : MonoBehaviour
{
    Vector4 _ZBufferParams;

    void Start()
    {
        _ZBufferParams = Shader.GetGlobalVector("_ZBufferParams");

        // 1: depth -> linear01 -> depth
        var depth = 0.5f;
        var linear01 = Linear01Depth(depth);
        Debug.Log($"1 linear01:{depth}>{linear01}");
        depth = DepthFromLinear01(linear01);
        Debug.Log($"1 linear01:{linear01}>{depth}");

        // 2: linear01 -> depth -> linear01
        linear01 = 0.2345f;
        depth = DepthFromLinear01(linear01);
        Debug.Log($"2 linear01:{linear01}>{depth}");
        linear01 = Linear01Depth(depth);
        Debug.Log($"2 linear01:{depth}>{linear01}");

        // 3: depth -> linearEye -> depth
        depth = 0.5f;
        var linearEye = LinearEyeDepth(depth);
        Debug.Log($"3 linearEye:{depth}>{linearEye}");
        depth = DepthFromLinearEye(linearEye);
        Debug.Log($"3 linearEye:{linearEye}>{depth}");

        // 4: linearEye -> depth -> linearEye
        linearEye = 0.4567f;
        depth = DepthFromLinearEye(linearEye);
        Debug.Log($"4 linearEye:{linearEye}>{depth}");
        linearEye = LinearEyeDepth(depth);
        Debug.Log($"4 linearEye:{depth}>{linearEye}");
    }

    // Same formula as Linear01Depth() in UnityCG.cginc.
    float Linear01Depth(float z)
    {
        return 1.0f / (_ZBufferParams.x * z + _ZBufferParams.y);
    }

    float DepthFromLinear01(float linear01)
    {
        // Even though it is called linear01, the value probably never drops below 1.0f / _ZBufferParams.y.
        //return (1.0f / ( linear01 * _ZBufferParams.x)) - (_ZBufferParams.y / _ZBufferParams.x);
        //return ((1.0f / linear01) - _ZBufferParams.y) / _ZBufferParams.x;
        return (1.0f - _ZBufferParams.y * linear01) / (_ZBufferParams.x * linear01);
    }

    // Same formula as LinearEyeDepth() in UnityCG.cginc.
    float LinearEyeDepth(float z)
    {
        return 1.0f / (_ZBufferParams.z * z + _ZBufferParams.w);
    }

    float DepthFromLinearEye(float linearEye)
    {
        //return ((1.0f / linearEye) - _ZBufferParams.w) / _ZBufferParams.z;
        return (1.0f - _ZBufferParams.w * linearEye) / (_ZBufferParams.z * linearEye);
    }
}
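
The round trip above can also be checked without Unity. The following is only a sketch under stated assumptions: the near and far planes are made-up values, and the four parameters are filled in with the values Unity documents for _ZBufferParams on a conventional (non-reversed) depth buffer. On platforms with a reversed depth buffer the parameters differ, so treat the numbers as illustrative.

```csharp
using System;

public static class DepthRoundTrip
{
    // Hypothetical clip planes, chosen only for this illustration.
    const double Near = 0.3;
    const double Far = 1000.0;

    // Assumed _ZBufferParams for a conventional (non-reversed) depth range:
    // x = 1 - far/near, y = far/near, z = x/far, w = y/far.
    static readonly double X = 1.0 - Far / Near;
    static readonly double Y = Far / Near;
    static readonly double Z = X / Far;
    static readonly double W = Y / Far;

    public static double Linear01Depth(double z) => 1.0 / (X * z + Y);
    public static double LinearEyeDepth(double z) => 1.0 / (Z * z + W);

    // Solve linear01 = 1 / (x * z + y) for z.
    public static double DepthFromLinear01(double linear01) =>
        (1.0 - Y * linear01) / (X * linear01);

    // Solve linearEye = 1 / (z' * z + w) for z.
    public static double DepthFromLinearEye(double linearEye) =>
        (1.0 - W * linearEye) / (Z * linearEye);

    public static void Main()
    {
        foreach (double depth in new[] { 0.0, 0.25, 0.5, 1.0 })
        {
            double back01 = DepthFromLinear01(Linear01Depth(depth));
            double backEye = DepthFromLinearEye(LinearEyeDepth(depth));
            Console.WriteLine($"depth={depth} via linear01={back01} via linearEye={backEye}");
        }
    }
}
```

With these assumed parameters, LinearEyeDepth maps depth 0 to the near plane and depth 1 to the far plane, which is a quick sanity check on the parameter values.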

References

Linear01Depth()で線形にした深度値を元の値に戻したい #Unity - Qiita (on restoring a depth value linearized with Linear01Depth() to its original value)

DecodeDepthNormal/Linear01Depth/LinearEyeDepth explanations - Unity Forum

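Testing Pointer Access and Polymorphism with Unmanaged Structs in unsafe Code

Environment

Unity 2022.3.1f1

Overview

Structs are passed by value, which makes them awkward to access. So I want to use pointers in unsafe code to access them directly.

I also want something like polymorphism, so that differently typed elements can be handled together through one NativeArray.

Concretely, the polymorphism part means I want to do something like the following (an example using classes).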

public abstract class Animal
{
    public abstract void Sound();
}

public class Dog : Animal
{
    public override void Sound()
    {
        Debug.Log("Woof");
    }
}

public class Cat : Animal
{
    public override void Sound()
    {
        Debug.Log("Meow");
    }
}

var animals = new Animal[]{ new Dog(), new Cat() };

for(int i = 0; i < animals.Length; i++)
    animals[i].Sound();

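Marshal.Alloc version (failed)

The part inside the for loop that obtains the interface fails with an error. C# does not allow a pointer to a managed type such as an interface, so ITestAnimal* cannot be declared.

I could not find a way to obtain an interface reference from an IntPtr.

In the first place, the allocation is only raw memory; nothing is ever constructed as a struct.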

public interface ITestAnimal
{
    void Sound();
}

public struct TestDog : ITestAnimal
{
    public int value;

    public void Sound()
    {
        Debug.Log($"Woof:{value}");
    }
}

public struct TestCat : ITestAnimal
{
    public int value;

    public void Sound()
    {
        Debug.Log($"Meow:{value}");
    }
}

var test3Animals = new NativeArray<IntPtr>(2, Allocator.Persistent);

test3Animals[0] = Marshal.AllocCoTaskMem(sizeof(TestDog));
((TestDog*)test3Animals[0])->value = 321;

test3Animals[1] = Marshal.AllocCoTaskMem(sizeof(TestCat));
((TestCat*)test3Animals[1])->value = 654;

for(int i = 0; i < test3Animals.Length; i++)
{
    // Error: a pointer to a managed type (the interface) cannot be declared.
    ITestAnimal animal = *((ITestAnimal*)test3Animals[i]);
    animal.Sound();
}

for(int i = 0; i < test3Animals.Length; i++)
    Marshal.FreeCoTaskMem(test3Animals[i]);

test3Animals.Dispose();

GCHandle.Alloc version

With this version, both direct access and polymorphism work.

public interface ITestAnimal
{
    void Sound();
}

public struct TestDog : ITestAnimal
{
    public int value;

    public void Sound()
    {
        Debug.Log($"Woof:{value}");
    }
}

public struct TestCat : ITestAnimal
{
    public int value;

    public void Sound()
    {
        Debug.Log($"Meow:{value}");
    }
}

var testAnimals = new NativeArray<GCHandle>(2, Allocator.Persistent);

// GCHandle.Alloc takes an object, so each struct is boxed and the box is pinned.
testAnimals[0] = GCHandle.Alloc(new TestDog(), GCHandleType.Pinned);
testAnimals[1] = GCHandle.Alloc(new TestCat(), GCHandleType.Pinned);

// Direct access through a pointer to the pinned data.
var t0 = (TestDog*)testAnimals[0].AddrOfPinnedObject();
t0->value = 123;

var t1 = (TestCat*)testAnimals[1].AddrOfPinnedObject();
t1->value = 456;

// Polymorphic access through the interface.
for(int i = 0; i < testAnimals.Length; i++)
    ((ITestAnimal)testAnimals[i].Target).Sound();

for(int i = 0; i < testAnimals.Length; i++)
    testAnimals[i].Free();

testAnimals.Dispose();
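
The reason the pointer write and the interface call see the same data can be shown in isolation. This is a minimal sketch, not the post's code: ICounter, Counter, and BoxedPinDemo are hypothetical names, and it needs unsafe code enabled. GCHandle.Alloc receives an object, so the struct is boxed; the pinned address points at that box's data, and Target returns the same box.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical types used only for this illustration.
public interface ICounter
{
    int Read();
}

public struct Counter : ICounter
{
    public int value;
    public int Read() => value;
}

public static class BoxedPinDemo
{
    // Writes through a raw pointer, then reads back through the interface.
    public static unsafe int WriteThroughPointer(int newValue)
    {
        // new Counter() is boxed here because Alloc takes an object.
        GCHandle handle = GCHandle.Alloc(new Counter(), GCHandleType.Pinned);
        try
        {
            var p = (Counter*)handle.AddrOfPinnedObject();
            p->value = newValue;

            // Target is the same boxed instance, so the write is visible.
            return ((ICounter)handle.Target).Read();
        }
        finally
        {
            handle.Free();
        }
    }

    public static void Main()
    {
        Console.WriteLine(WriteThroughPointer(123));
    }
}
```

One consequence of this design is that every element lives in its own heap-allocated box, so the NativeArray only stores handles, not the struct data itself.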

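Composition

Here I tried treating a contained struct as if it were the base type.

Both direct access and polymorphism work.

Because the base is a struct rather than an interface, it can also hold shared fields.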

[StructLayout(LayoutKind.Sequential)]
public unsafe struct Test2Animal
{
    public enum AnimalType
    {
        Dog,
        Cat,
    }

    public AnimalType type;

    public delegate void CallBackSound(Test2Animal* pAnimal);

    private IntPtr sound;

    public void SetDelegateSound(CallBackSound func)
    {
        // Note: the delegate must not be garbage-collected while the function pointer is in use.
        sound = Marshal.GetFunctionPointerForDelegate(func);
    }

    public void Sound()
    {
        var func = Marshal.GetDelegateForFunctionPointer<CallBackSound>(sound);
        fixed(Test2Animal* pAnimal = &this)
        {
            func.Invoke(pAnimal);
        }
    }
}

[StructLayout(LayoutKind.Sequential)]
public unsafe struct Test2Dog
{
    // Placed first so that a Test2Dog* can be read as a Test2Animal*.
    public Test2Animal animal;
    public int iv;

    public void Setup()
    {
        animal.type = Test2Animal.AnimalType.Dog;
        animal.SetDelegateSound(static (pAnimal) => { ((Test2Dog*)pAnimal)->Sound(); });
        iv = 0;
    }

    public void Sound()
    {
        Debug.Log($"Woof:{iv}");
    }
}

[StructLayout(LayoutKind.Sequential)]
public unsafe struct Test2Cat
{
    // Placed first so that a Test2Cat* can be read as a Test2Animal*.
    public Test2Animal animal;
    public float fv;

    public void Setup()
    {
        animal.type = Test2Animal.AnimalType.Cat;
        animal.SetDelegateSound(static (pAnimal) => { ((Test2Cat*)pAnimal)->Sound(); });
        fv = 0.0f;
    }

    public void Sound()
    {
        Debug.Log($"Meow:{fv}");
    }
}

var test2Animals = new NativeArray<IntPtr>(2, Allocator.Persistent);

test2Animals[0] = Marshal.AllocCoTaskMem(sizeof(Test2Dog));
((Test2Dog*)test2Animals[0])->Setup();

test2Animals[1] = Marshal.AllocCoTaskMem(sizeof(Test2Cat));
((Test2Cat*)test2Animals[1])->Setup();

((Test2Dog*)test2Animals[0])->iv = 123;
((Test2Cat*)test2Animals[1])->fv = 4.56f;

for(int i = 0; i < test2Animals.Length; i++)
    ((Test2Animal*)test2Animals[i])->Sound();

for(int i = 0; i < test2Animals.Length; i++)
    Marshal.FreeCoTaskMem(test2Animals[i]);

test2Animals.Dispose();
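
The composition approach relies on the contained base struct being the first field, so a pointer to the outer struct can be reinterpreted as a pointer to the base. As a variation, the type tag alone can drive the dispatch with a switch instead of a stored function pointer. The sketch below is an assumption-laden illustration rather than the post's method: AnimalKind, AnimalHeader, DogData, CatData, and TagDispatch are hypothetical names, Console output stands in for Debug.Log, and unsafe code must be enabled.

```csharp
using System;
using System.Runtime.InteropServices;

public enum AnimalKind { Dog, Cat }

[StructLayout(LayoutKind.Sequential)]
public struct AnimalHeader
{
    public AnimalKind kind; // shared field, readable without knowing the concrete type
}

[StructLayout(LayoutKind.Sequential)]
public struct DogData
{
    public AnimalHeader header; // first field, so it sits at offset 0
    public int iv;
}

[StructLayout(LayoutKind.Sequential)]
public struct CatData
{
    public AnimalHeader header; // first field, so it sits at offset 0
    public float fv;
}

public static unsafe class TagDispatch
{
    // Reads the shared header, then reinterprets the pointer as the concrete struct.
    public static string Describe(AnimalHeader* p)
    {
        switch (p->kind)
        {
            case AnimalKind.Dog: return $"Dog:{((DogData*)p)->iv}";
            case AnimalKind.Cat: return $"Cat:{((CatData*)p)->fv}";
            default: return "Unknown";
        }
    }

    public static string[] Run()
    {
        IntPtr dogMem = Marshal.AllocHGlobal(sizeof(DogData));
        IntPtr catMem = Marshal.AllocHGlobal(sizeof(CatData));
        try
        {
            var dog = (DogData*)dogMem;
            dog->header.kind = AnimalKind.Dog;
            dog->iv = 123;

            var cat = (CatData*)catMem;
            cat->header.kind = AnimalKind.Cat;
            cat->fv = 4.5f;

            return new[] { Describe((AnimalHeader*)dogMem), Describe((AnimalHeader*)catMem) };
        }
        finally
        {
            Marshal.FreeHGlobal(dogMem);
            Marshal.FreeHGlobal(catMem);
        }
    }

    public static void Main()
    {
        foreach (string line in Run())
            Console.WriteLine(line);
    }
}
```

The trade-off is that the switch must list every concrete type, whereas the function-pointer version in the post lets each struct register its own behavior.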

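Related

【C#】メモリレイアウトを制御できるStructLayout &LayoutKind.Explicitを用いて別の型として解釈する(あとUnsafe.Asを利用した例も) - はなちるのマイノート (on reinterpreting data as another type with StructLayout and LayoutKind.Explicit, plus an example using Unsafe.As)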

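Parallax with the Gyroscope

Environment

Unity 2022.3.1f1

Overview

I wanted to reproduce something like the gyroscope effect in the tweet quoted below, so I gave it a try.

When the phone is flipped over, the back side becomes visible.

The program uses an oblique (off-axis) frustum.

Loco Looper’s sweet holographic effect on iPhone and iPad. It’s tough going back to flat 2D games after this! #screenshotsaturday pic.twitter.com/5X26INPgda

James Vanas (@jamesvanas), August 20, 2021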

Code

using UnityEngine;
using TMPro;

public class ObliqueProjection : MonoBehaviour
{
    [SerializeField] private Camera _camera = null;
    [SerializeField] private TextMeshProUGUI _text = null;
    [SerializeField] [Range(-1f,1f)] private float _horizontal = 0.0f;
    [SerializeField] [Range(-1f,1f)] private float _vertical = 0.0f;

    private Vector3 _org_position;
    private float _distance;

    void OnEnable()
    {
        _org_position = _camera.transform.localPosition;
        _distance = _org_position.magnitude;

        // In the editor the gyro stays off, so the sliders drive the effect instead.
#if UNITY_EDITOR
        Input.gyro.enabled = false;
#else
        Input.gyro.enabled = true;
#endif
    }

    void OnDisable()
    {
    }

    void LateUpdate()
    {
        if(Input.gyro.enabled == true)
        {
            const float scale = 1.0f;
            const float offset = 1.0f;

            _horizontal = Mathf.Clamp(Input.gyro.gravity.x * scale, -1.0f, 1.0f);
            _vertical = Mathf.Clamp(Input.gyro.gravity.y * scale + offset, -1.0f, 1.0f);
        }

        var cam = _camera;
        var rect = cam.pixelRect;
        var ratio = (float)rect.height / (float)rect.width;

        _text.text = $"horizontal:{_horizontal} vertical:{_vertical}";

        // Shift the frustum by writing the off-axis terms of the projection matrix.
        cam.ResetProjectionMatrix();
        var proj = cam.projectionMatrix;
        proj.m02 = _horizontal * ratio;
        proj.m12 = _vertical;
        cam.projectionMatrix = proj;

        // Move the camera the opposite way so the subject stays in view.
        var tan = Mathf.Tan(cam.fieldOfView*0.5f*Mathf.Deg2Rad);
        _camera.transform.localPosition = _org_position + new Vector3(-_horizontal * _distance * tan, 0.0f, -_vertical * _distance * tan);
    }
}
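
To see what writing m02 and m12 does, it helps to convert them back into frustum extents. In an OpenGL-style perspective matrix, which is the convention Unity's Camera.projectionMatrix follows, m02 = (right + left) / (right - left) and m12 = (top + bottom) / (top - bottom). The sketch below is a hypothetical helper (ObliqueFrustum is not part of the post) that recovers the near-plane extents for given m02 and m12 while keeping the frustum's width and height fixed.

```csharp
using System;

public static class ObliqueFrustum
{
    // Returns the near-plane extents of a perspective frustum whose
    // m02 / m12 matrix entries are set to the given values.
    public static (double left, double right, double bottom, double top) Extents(
        double fovYDegrees, double aspect, double near, double m02, double m12)
    {
        double halfHeight = near * Math.Tan(fovYDegrees * 0.5 * Math.PI / 180.0);
        double halfWidth = halfHeight * aspect;

        // From m02 = (right + left) / (right - left) with right - left = 2 * halfWidth,
        // and likewise for m12 with top - bottom = 2 * halfHeight.
        return (halfWidth * (m02 - 1.0), halfWidth * (m02 + 1.0),
                halfHeight * (m12 - 1.0), halfHeight * (m12 + 1.0));
    }

    public static void Main()
    {
        var symmetric = Extents(60.0, 16.0 / 9.0, 0.3, 0.0, 0.0);
        var shifted = Extents(60.0, 16.0 / 9.0, 0.3, 0.5, 1.0);
        Console.WriteLine($"symmetric: {symmetric}");
        Console.WriteLine($"shifted:   {shifted}");
    }
}
```

With m02 = 0 and m12 = 0 the extents are symmetric; with m12 = 1 the bottom edge lands exactly on the view axis, which is the fully tilted case the clamp in the post allows.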

References

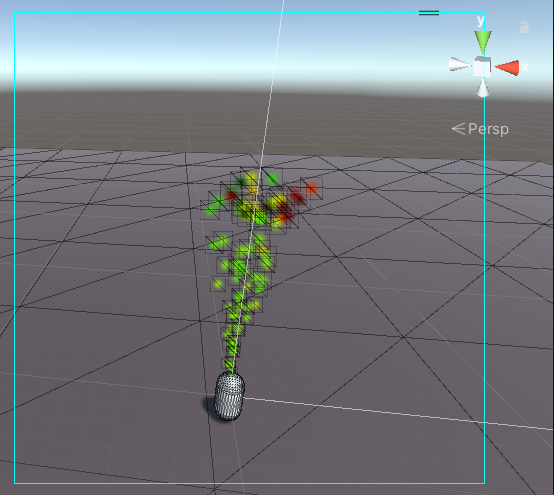

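Fire Simulation 2

Environment

Unity 2022.3.1f1

Overview

This is a test of my earlier fire simulation with the external-force input replaced by particles.

To display the fire at the same position and with the same appearance as the particles, I use a scissor projection matrix.

The fire shader adds a flow map and a Voronoi-like detail texture to make it look convincing.

Code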

using System;
#if UNITY_EDITOR
using UnityEditor; // SceneView is editor-only, so keep this out of player builds
#endif
using UnityEngine;
using UnityEngine.UI;
using static UnityEngine.GraphicsBuffer;

public unsafe class TestFluid5 : MonoBehaviour
{
    [SerializeField] private Transform _target = null;
    [SerializeField] Material _material = null;
    [SerializeField] private ComputeShader _compute = null;
    [SerializeField] private RawImage _densityPreview = null;
    [SerializeField] private RawImage _velocityPreview = null;
    [SerializeField] private RawImage _forcePreview = null;
    [SerializeField] private Camera _camera = null;
    [SerializeField] private Camera _forceCamera = null;
    [SerializeField] private float _planeSize = 1.0f;
    [SerializeField] private Vector3 _pivot = Vector3.zero;
    [SerializeField] private float _attenuation = 0.99f;
    [SerializeField] private float _deisityAttenuation = 0.985f;
    [SerializeField] private int _simTexSize = 64;
    [SerializeField] private int _densityTexSize = 256;

    private RenderTexture _divergenceTexture = null;
    private DoubleBufferdRenderTexture _velocityBuffer = null;
    private DoubleBufferdRenderTexture _pressureBuffer = null;
    private DoubleBufferdRenderTexture _densityBuffer = null;
    private RenderTexture _forceTexture = null;

    // Compute kernel indices
    private int _knUpdateAdvection;
    private int _knInteractionForce;
    private int _knInteractionDensityForce;
    private int _knUpdateDivergence;
    private int _knUpdatePressurePsfH;
    private int _knUpdatePressurePsfV;
    private int _knUpdateVelocity;
    private int _knUpdateDensity;

    // Shader property IDs
    private static int _spTime = Shader.PropertyToID("_Time");
    private static int _spDeltaTime = Shader.PropertyToID("_DeltaTime");
    private static int _spSourceVelocity = Shader.PropertyToID("_SourceVelocity");
    private static int _spResultVelocity = Shader.PropertyToID("_ResultVelocity");
    private static int _spSourcePressure = Shader.PropertyToID("_SourcePressure");
    private static int _spSourceForce = Shader.PropertyToID("_SourceForce");
    private static int _spResultPressure = Shader.PropertyToID("_ResultPressure");
    private static int _spResultDivergence = Shader.PropertyToID("_ResultDivergence");
    private static int _spSourceDensity = Shader.PropertyToID("_SourceDensity");
    private static int _spResultDensity = Shader.PropertyToID("_ResultDensity");
    private static int _spSimWidth = Shader.PropertyToID("_SimWidth");
    private static int _spSimHeight = Shader.PropertyToID("_SimHeight");
    private static int _spSimForceStart = Shader.PropertyToID("_SimForceStart");
    private static int _spDensityForceStart = Shader.PropertyToID("_DensityForceStart");
    private static int _spAttenuation = Shader.PropertyToID("_Attenuation");
    private static int _spDeisityAttenuation = Shader.PropertyToID("_DeisityAttenuation");
    private static int _spDensityWidth = Shader.PropertyToID("_DensityWidth");
    private static int _spDensityHeight = Shader.PropertyToID("_DensityHeight");
    private static int _spVectorFieldTex = Shader.PropertyToID("_VectorFieldTex");
    private static int _spDensityTex = Shader.PropertyToID("_DensityTex");

    private GraphicsBuffer _gbVertices = null;
    private GraphicsBuffer _gbWorldPositions = null;
    private Bounds _bounds;
    private Vector2Int _simFirePos = new Vector2Int(0, 2);

    private static int _spVertices = Shader.PropertyToID("_Vertices");
    private static int _spWorldPositions = Shader.PropertyToID("_WorldPositions");
    private static int _spDepthPosWS = Shader.PropertyToID("_DepthPosWS");

    private void Start()
    {
        Setup();
    }

    private void OnValidate()
    {
        _simTexSize = Mathf.Max(_simTexSize, 8);
        _densityTexSize = Mathf.Max(_densityTexSize, 8);

        DestroyBuffers();
        Setup();
    }

    private void OnDestroy()
    {
        DestroyBuffers();

        if(_gbVertices != null)
            _gbVertices.Dispose();

        if(_gbWorldPositions != null)
            _gbWorldPositions.Dispose();
    }

    private void Setup()
    {
        _camera.depthTextureMode |= DepthTextureMode.Depth;

        CreateBuffers();

        _knUpdateAdvection = _compute.FindKernel("UpdateAdvection");
        _knInteractionForce = _compute.FindKernel("InteractionForce");
        _knInteractionDensityForce = _compute.FindKernel("InteractionDensityForce");
        _knUpdateDivergence = _compute.FindKernel("UpdateDivergence");
        _knUpdatePressurePsfH = _compute.FindKernel("UpdatePressurePsfH");
        _knUpdatePressurePsfV = _compute.FindKernel("UpdatePressurePsfV");
        _knUpdateVelocity = _compute.FindKernel("UpdateVelocity");
        _knUpdateDensity = _compute.FindKernel("UpdateDensity");

        // Setup() also runs from OnValidate, so release any previous buffer first.
        if(_gbVertices != null)
            _gbVertices.Dispose();

        _gbVertices = new GraphicsBuffer(Target.Structured, 4, sizeof(Vector2));
        var vertices = new Vector2[]
        {
            new Vector2(-0.5f, -0.5f),
            new Vector2(-0.5f, 0.5f),
            new Vector2( 0.5f, 0.5f),
            new Vector2( 0.5f, -0.5f)
        };
        _gbVertices.SetData(vertices);
        _material.SetBuffer(_spVertices, _gbVertices);

        _bounds = new Bounds(Vector3.zero, new Vector3(10000.0f, 10000.0f, 10000.0f));

        if(_gbWorldPositions != null)
            _gbWorldPositions.Dispose();

        _gbWorldPositions = new GraphicsBuffer(Target.Structured, UsageFlags.LockBufferForWrite, 4, sizeof(Vector3));
        _material.SetBuffer(_spWorldPositions, _gbWorldPositions);
    }

    private void CreateBuffers()
    {
        _velocityBuffer = DoubleBufferdRenderTexture.Create(new RenderTextureDescriptor(_simTexSize, _simTexSize, RenderTextureFormat.ARGBHalf, 0,0){enableRandomWrite = true});
        _pressureBuffer = DoubleBufferdRenderTexture.Create(new RenderTextureDescriptor(_simTexSize, _simTexSize, RenderTextureFormat.ARGBHalf, 0,0){enableRandomWrite = true});
        _densityBuffer = DoubleBufferdRenderTexture.Create(new RenderTextureDescriptor(_densityTexSize, _densityTexSize, RenderTextureFormat.ARGBHalf, 0,0){enableRandomWrite = true});

        _divergenceTexture = new RenderTexture(_simTexSize, _simTexSize, 0, RenderTextureFormat.ARGBHalf, RenderTextureReadWrite.Linear);
        _divergenceTexture.enableRandomWrite = true;
        _divergenceTexture.Create();

        _forceTexture = new RenderTexture(_simTexSize, _simTexSize, 0, RenderTextureFormat.ARGB32, RenderTextureReadWrite.Linear);
        _forceTexture.filterMode = FilterMode.Point;
        _forceCamera.targetTexture = _forceTexture;
        _forceTexture.Create();
    }

    private void DestroyBuffers()
    {
        if(_divergenceTexture != null)
            _divergenceTexture.Release();

        if(_velocityBuffer != null)
            _velocityBuffer.Dispose();

        if(_pressureBuffer != null)
            _pressureBuffer.Dispose();

        if(_densityBuffer != null)
            _densityBuffer.Dispose();

        if(_forceTexture != null)
            _forceTexture.Release();
    }

    // Rewrites the projection so that the viewport rectangle r fills the whole render target.
    private static void SetScissorRect(Camera cam, bool isAdjust, ref Rect r)
    {
        if(isAdjust == true)
        {
            // Clamp the rectangle to the [0, 1] viewport.
            if ( r.x < 0 )
            {
                r.width += r.x;
                r.x = 0;
            }

            if ( r.y < 0 )
            {
                r.height += r.y;
                r.y = 0;
            }

            r.width = Mathf.Min( 1 - r.x, r.width );
            r.height = Mathf.Min( 1 - r.y, r.height );
        }

        Matrix4x4 m = cam.projectionMatrix;
        // Matrix4x4 m1 = Matrix4x4.TRS( new Vector3( r.x, r.y, 0 ), Quaternion.identity, new Vector3( r.width, r.height, 1 ) );
        Matrix4x4 m2 = Matrix4x4.TRS (new Vector3 ( ( 1/r.width - 1), ( 1/r.height - 1 ), 0), Quaternion.identity, new Vector3 (1/r.width, 1/r.height, 1));
        Matrix4x4 m3 = Matrix4x4.TRS( new Vector3( -r.x * 2 / r.width, -r.y * 2 / r.height, 0 ), Quaternion.identity, Vector3.one );
        cam.projectionMatrix = m3 * m2 * m;
    }

    private void FixedUpdate()
    {
#if UNITY_EDITOR
        // Follow the Scene view camera while in the editor.
        var sceneView = SceneView.lastActiveSceneView;
        if(sceneView != null)
        {
            var sceneViewCamera = sceneView.camera;
            _camera.transform.localPosition = sceneViewCamera.transform.localPosition;
            _camera.transform.localRotation = sceneViewCamera.transform.localRotation;
        }
#endif

        var sizeHalf = _planeSize * 0.5f;
        var sizeHalfH = sizeHalf;// * 1.0f / _mainCamera.aspect;

        // Project two opposite corners of the fire plane to get its viewport rectangle.
        var centerPosWS = _target.position + _pivot * -sizeHalf;
        var centerPosVS = _camera.worldToCameraMatrix.MultiplyPoint(centerPosWS);

        var posVPSs = (Span<Vector3>)stackalloc Vector3[4];
        posVPSs[0] = _camera.projectionMatrix.MultiplyPoint(centerPosVS + new Vector3(-sizeHalfH, -sizeHalf, 0.0f));
        posVPSs[1] = _camera.projectionMatrix.MultiplyPoint(centerPosVS + new Vector3( sizeHalfH, sizeHalf, 0.0f));

        if(float.IsNaN(posVPSs[0].x) == true || float.IsInfinity(posVPSs[0].x) == true)
            return;

        // NDC [-1, 1] to viewport [0, 1]
        posVPSs[0] = posVPSs[0] * 0.5f + new Vector3(0.5f, 0.5f, 0.0f);
        posVPSs[1] = posVPSs[1] * 0.5f + new Vector3(0.5f, 0.5f, 0.0f);

        var scissorRect = new Rect();
        scissorRect.min = posVPSs[0];
        scissorRect.max = posVPSs[1];

        // Match the force camera to the main camera, then narrow it to the rectangle.
        _forceCamera.rect = new Rect(0,0,1,1);
        _forceCamera.ResetProjectionMatrix();
        _forceCamera.transform.position = _camera.transform.position;
        _forceCamera.transform.rotation = _camera.transform.rotation;
        _forceCamera.fieldOfView = _camera.fieldOfView;

        var forceTargetTex = _forceCamera.targetTexture;
        _forceCamera.targetTexture = null;

        var isAdjust = false;
        SetScissorRect(_forceCamera, isAdjust, ref scissorRect);

        posVPSs[0].Set(scissorRect.min.x, scissorRect.min.y, posVPSs[0].z);
        posVPSs[1].Set(scissorRect.min.x, scissorRect.max.y, posVPSs[1].z);
        posVPSs[2].Set(scissorRect.max.x, scissorRect.max.y, posVPSs[2].z);
        posVPSs[3].Set(scissorRect.max.x, scissorRect.min.y, posVPSs[3].z);

        _forceCamera.targetTexture = forceTargetTex;

        var targetPosVS = _camera.worldToCameraMatrix.MultiplyPoint(_target.position);

        // World-space corners of the quad that displays the fire.
        var positions = _gbWorldPositions.LockBufferForWrite<Vector3>(0, 4);
        for(int i = 0; i < 4; i++)
        {
            posVPSs[i].z = -centerPosVS.z;//-targetPosVS.z - sizeHalf;
            positions[i] = _camera.ViewportToWorldPoint(posVPSs[i]);
        }

        Debug.DrawLine(positions[0], positions[1], Color.cyan);
        Debug.DrawLine(positions[1], positions[2], Color.cyan);
        Debug.DrawLine(positions[2], positions[3], Color.cyan);
        Debug.DrawLine(positions[3], positions[0], Color.cyan);

        _gbWorldPositions.UnlockBufferAfterWrite<Vector3>(4);

        var depthPos = _target.position + _pivot * -_planeSize;
        _material.SetVector(_spDepthPosWS, depthPos);

        _compute.SetFloat(_spSimWidth, _simTexSize);
        _compute.SetFloat(_spSimHeight, _simTexSize);
        _compute.SetFloat(_spDeltaTime, Time.deltaTime);
        _compute.SetFloat(_spAttenuation, _attenuation);
        _compute.SetFloat(_spDeisityAttenuation, _deisityAttenuation);
        _compute.SetFloat(_spTime, Time.time);
        _compute.SetFloat(_spDensityWidth, _densityTexSize);
        _compute.SetFloat(_spDensityHeight, _densityTexSize);

        // One simulation step
        UpdateAdvection();
        InteractionForce();
        UpdateDivergence();
        UpdatePressure();
        UpdateVelocity();
        UpdateDensity();

        _forcePreview.texture = _forceTexture;
        _velocityPreview.texture = _velocityBuffer.current;
        _densityPreview.texture = _densityBuffer.current;

        _material.SetTexture(_spVectorFieldTex, _velocityBuffer.current);
        _material.SetTexture(_spDensityTex, _densityBuffer.current);
    }

    private void Update()
    {
        Graphics.DrawProcedural(_material, _bounds, MeshTopology.Quads, 4, 1, _camera);
    }

    // Advection
    private void UpdateAdvection()
    {
        _compute.SetTexture(_knUpdateAdvection, _spSourceVelocity, _velocityBuffer.current);
        _compute.SetTexture(_knUpdateAdvection, _spResultVelocity, _velocityBuffer.back);
        _compute.Dispatch(_knUpdateAdvection, _velocityBuffer.width / 8, _velocityBuffer.height / 8, 1);
        _velocityBuffer.Flip();
    }

    // External force
    private void InteractionForce()
    {
        _compute.SetTexture(_knInteractionForce, _spSourceForce, _forceTexture);
        _compute.SetTexture(_knInteractionForce, _spResultVelocity, _velocityBuffer.current);
        _compute.SetTexture(_knInteractionForce, _spResultDensity, _densityBuffer.current);
        _compute.Dispatch(_knInteractionForce, _velocityBuffer.width / 8, _velocityBuffer.height / 8, 1);
    }

    // Divergence
    private void UpdateDivergence()
    {
        _compute.SetTexture(_knUpdateDivergence, _spSourceVelocity, _velocityBuffer.current);
        _compute.SetTexture(_knUpdateDivergence, _spResultDivergence, _divergenceTexture);
        _compute.Dispatch(_knUpdateDivergence, _divergenceTexture.width / 8, _divergenceTexture.height / 8, 1);
    }

    // Pressure
    private void UpdatePressure()
    {
        // Horizontal
        _compute.SetTexture(_knUpdatePressurePsfH, _spSourcePressure, _pressureBuffer.current);
        _compute.SetTexture(_knUpdatePressurePsfH, _spResultPressure, _pressureBuffer.back);
        _compute.SetTexture(_knUpdatePressurePsfH, _spResultDivergence, _divergenceTexture);
        _compute.Dispatch(_knUpdatePressurePsfH, _pressureBuffer.width / 8, _pressureBuffer.height / 8, 1);

        // Vertical
        _compute.SetTexture(_knUpdatePressurePsfV, _spSourcePressure, _pressureBuffer.current);
        _compute.SetTexture(_knUpdatePressurePsfV, _spResultPressure, _pressureBuffer.back);
        _compute.SetTexture(_knUpdatePressurePsfV, _spResultDivergence, _divergenceTexture);
        _compute.Dispatch(_knUpdatePressurePsfV, _pressureBuffer.width / 8, _pressureBuffer.height / 8, 1);

        _pressureBuffer.Flip();
    }

    // Velocity
    private void UpdateVelocity()
    {
        _compute.SetTexture(_knUpdateVelocity, _spSourceVelocity, _velocityBuffer.current);
        _compute.SetTexture(_knUpdateVelocity, _spSourcePressure, _pressureBuffer.current);
        _compute.SetTexture(_knUpdateVelocity, _spResultVelocity, _velocityBuffer.back);
        _compute.Dispatch(_knUpdateVelocity, _velocityBuffer.width / 8, _velocityBuffer.height / 8, 1);
        _velocityBuffer.Flip();
    }

    // Density
    private void UpdateDensity()
    {
        _compute.SetTexture(_knUpdateDensity, _spSourceDensity, _densityBuffer.current);
        _compute.SetTexture(_knUpdateDensity, _spSourceVelocity, _velocityBuffer.current);
        _compute.SetTexture(_knUpdateDensity, _spResultDensity, _densityBuffer.back);
        _compute.Dispatch(_knUpdateDensity, _densityBuffer.width / 8, _densityBuffer.height / 8, 1);
        _densityBuffer.Flip();
    }
}
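
The effect of the two matrices multiplied onto the projection in SetScissorRect can be checked along one axis with plain arithmetic. The sketch below is a hypothetical helper, not part of the post: it applies the same scale and offsets to a normalized device coordinate. Because the translation column of each TRS matrix multiplies the clip-space w component, the same relation holds after the perspective divide, which is why the check can be done directly in NDC.

```csharp
using System;

public static class ScissorMapping
{
    // Applies, along one axis, what m3 * m2 does to an NDC coordinate in [-1, 1]
    // for a viewport sub-range [start, start + size] given in [0, 1] units.
    public static double Remap(double ndc, double start, double size)
    {
        double scaled = ndc / size + (1.0 / size - 1.0); // m2: scale, then translate
        return scaled - start * 2.0 / size;              // m3: translate
    }

    // Converts a viewport coordinate in [0, 1] to NDC in [-1, 1].
    public static double ViewportToNdc(double v) => v * 2.0 - 1.0;

    public static void Main()
    {
        double start = 0.25, size = 0.5;
        double leftEdge = Remap(ViewportToNdc(start), start, size);
        double rightEdge = Remap(ViewportToNdc(start + size), start, size);
        Console.WriteLine($"left edge -> {leftEdge}, right edge -> {rightEdge}");
    }
}
```

The edges of the rectangle map to -1 and +1, so the force camera renders only that region stretched over its whole render target.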

Shader "Custom/Fire4"
{
    Properties
    {
        _GradientTex ("Gradient Texture", 2D) = "white" {}
        _DetailTex ("Detail Texture", 2D) = "white" {}
        _FlowNoiseTex ("FlowNoise Texture", 2D) = "white" {}
        _FlowNoiseScale ("FlowNoise Scale", Range(0.0, 1.0)) = 0.5
        _FlowAnimLength ("Flow Animation Length", Range(0.0, 20.0)) = 4
        _FlowStrength ("Flow Strength", Range(0.0, 1.0)) = 0.5
        _DetailBlendScale ("Detail BlendScale", Range(0.0, 5.0)) = 2.0
        _DetailAlphaScale ("Detail AlphaScale", Range(0.0, 5.0)) = 2.0
        _SoftParticlesNearFadeDistance("Soft Particles Near Fade", Float) = 0.01
        _SoftParticlesFarFadeDistance("Soft Particles Far Fade", Float) = 1.0
    }

    CGINCLUDE
    #include "UnityCG.cginc"

    struct appdata
    {
        uint vertexId : SV_VertexID;
        uint instanceId : SV_InstanceID;
    };

    struct v2f
    {
        float4 posCS : SV_POSITION;
        float2 uv : TEXCOORD1;
        float4 posSS : TEXCOORD2;
    };

    sampler2D _VectorFieldTex;
    float4 _VectorFieldTex_TexelSize;
    sampler2D _DensityTex;
    float4 _DensityTex_TexelSize;
    sampler2D _GradientTex;
    sampler2D _DetailTex;
    float4 _DetailTex_ST;

    StructuredBuffer<float2> _Vertices;
    StructuredBuffer<float3> _WorldPositions;

    sampler2D _FlowNoiseTex;
    float _FlowNoiseScale;
    float _FlowAnimLength;
    float _FlowStrength;
    float _DetailBlendScale;
    float _DetailAlphaScale;

    sampler2D _CameraDepthTexture;
    float _SoftParticlesNearFadeDistance;
    float _SoftParticlesFarFadeDistance;

    // Flow map: blend two samples whose phases are half a cycle apart.
    float GetFlowDetail(float2 uv, float2 flowDir)
    {
        half phaseOffset = _FlowNoiseScale * tex2D(_FlowNoiseTex, uv).r;
        float flowPhase0 = frac(phaseOffset + (_Time.y) / _FlowAnimLength);
        float flowPhase1 = frac(phaseOffset + (_Time.y) / _FlowAnimLength + 0.5);
        float flowLerp = abs(0.5 - flowPhase0) * 2;

        float detail0 = tex2D(_DetailTex, uv * _DetailTex_ST.xy + flowDir * flowPhase0).r;
        float detail1 = tex2D(_DetailTex, uv * _DetailTex_ST.xy + flowDir * flowPhase1).r;

        return lerp(detail0, detail1, flowLerp);
    }
    ENDCG

    SubShader
    {
        Tags
        {
            "RenderType" = "Transparent"
            "Queue" = "Transparent"
        }

        ZWrite Off
        Lighting Off
        Blend SrcAlpha OneMinusSrcAlpha
        Cull Off

        Pass
        {
            ZTest Always

            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag

            v2f vert(appdata v)
            {
                v2f o;
                const uint vnum = 4;
                uint vertexId = v.vertexId % vnum;

                o.posCS = mul(UNITY_MATRIX_VP, float4(_WorldPositions[vertexId], 1.0));

                const float2 uv[] =
                {
                    float2(0, 0),
                    float2(0, 1),
                    float2(1, 1),
                    float2(1, 0)
                };
                o.uv = uv[vertexId];

                o.posSS = ComputeScreenPos(o.posCS);
                // Store the eye-space depth of the quad for the soft-particle fade.
                o.posSS.z = -mul(UNITY_MATRIX_V, float4(_WorldPositions[vertexId], 1.0)).z;

                return o;
            }

            float4 frag(v2f i) : SV_Target
            {
                float4 col;
                float2 vec = tex2D(_VectorFieldTex, i.uv).xy;
                float density = saturate(tex2D(_DensityTex, i.uv).a);
                float2 flowDir = -vec * lerp(_FlowStrength * 0.3, _FlowStrength, 1.0 - density);
                float detail = GetFlowDetail(i.uv, flowDir);

                float gradient = density - (1.0 - detail) * _DetailBlendScale;
                col = fixed4(tex2D(_GradientTex, float2(gradient, 0.5)).rgb, saturate((gradient * _DetailAlphaScale) * density));

                // Soft particles: fade out where the quad approaches scene geometry.
                float invFadeDistance = 1.0 / (_SoftParticlesFarFadeDistance - _SoftParticlesNearFadeDistance);
                float sceneZ = LinearEyeDepth(SAMPLE_DEPTH_TEXTURE_PROJ(_CameraDepthTexture, UNITY_PROJ_COORD(i.posSS)));
                float softParticlesFade = saturate(invFadeDistance * ((sceneZ - _SoftParticlesNearFadeDistance) - i.posSS.z));
                col.a *= softParticlesFade;

                return col;
            }
            ENDCG
        }
    }
}
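
The flow-map blend in GetFlowDetail can be understood from its phase arithmetic alone. The following sketch ports only that arithmetic to C# (FlowPhases is a hypothetical name, and the noise-based phase offset is passed in as a plain number). Each sample's UV offset jumps when its phase wraps from 1 back to 0, and the blend weight is arranged so that a sample contributes nothing at the moment it wraps.

```csharp
using System;

public static class FlowPhases
{
    static double Frac(double x) => x - Math.Floor(x);

    // Returns the two phases and the lerp weight toward the second sample,
    // mirroring flowPhase0, flowPhase1, and flowLerp in the shader.
    public static (double phase0, double phase1, double weight1) At(
        double time, double animLength, double phaseOffset)
    {
        double phase0 = Frac(phaseOffset + time / animLength);
        double phase1 = Frac(phaseOffset + time / animLength + 0.5);
        double weight1 = Math.Abs(0.5 - phase0) * 2.0;
        return (phase0, phase1, weight1);
    }

    public static void Main()
    {
        for (double t = 0.0; t <= 4.0; t += 0.5)
        {
            var p = At(t, 4.0, 0.0);
            Console.WriteLine($"t={t} phase0={p.phase0} phase1={p.phase1} weight1={p.weight1}");
        }
    }
}
```

When phase0 wraps (phase0 = 0) the weight is 1, so only the second sample is visible; when phase1 wraps (phase0 = 0.5) the weight is 0, so only the first sample is visible.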


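Digging Holes with NaiveSurfaceNets

Environment

Unity 2022.2.14f1

Overview

This is a test that builds a box with my earlier NaiveSurfaceNets implementation and carves spherical holes in it.

The code is basically unchanged from before, but I added the following two functions to the ComputeShader: one that carves the hole and one that computes the normals.

It looks like this could be used to make a mole-digging game.

Code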

#pragma kernel ChangeSdfVoxels
[numthreads(1, 1, 1)]
void ChangeSdfVoxels(uint3 id : SV_DispatchThreadID)
{
    // Voxel addressed by this thread, inside the cube that surrounds the sphere.
    uint3 start = SpherePos - SphereRadius;
    uint3 vpos = start + id;

    if(vpos.x > SdfVoxelSize - 1 || vpos.y > SdfVoxelSize - 1 || vpos.z > SdfVoxelSize - 1)
        return;

    uint index = (vpos.z * SdfVoxelSize * SdfVoxelSize) + (vpos.y * SdfVoxelSize) + (vpos.x);

    // 1 at the sphere center, 0 on its surface, negative outside.
    float value = 1.0 - (length(vpos - SpherePos) / (float)SphereRadius);
    SdfVoxels[index] = max(value, SdfVoxels[index]);
}

#pragma kernel GenerateNormals
[numthreads(1024, 1, 1)]
void GenerateNormals(uint3 id : SV_DispatchThreadID)
{
    // One thread per triangle.
    if(id.x >= IndirectArgs[0] / 3)
        return;

    uint vindex = id.x * 3;

    float3 vec0 = Vertices[Indices[vindex + 1]] - Vertices[Indices[vindex]];
    float3 vec1 = Vertices[Indices[vindex + 2]] - Vertices[Indices[vindex]];
    float3 normal = normalize(cross(vec0, vec1));

    // InterlockedAdd works on integers, so the normal is quantized before accumulation.
    float QUANTIZE_FACTOR = 32768.0;
    int3 inormal = (int3) (normal * QUANTIZE_FACTOR);

    Normals.InterlockedAdd(normalStride * Indices[vindex + 0] + 0, inormal.x);
    Normals.InterlockedAdd(normalStride * Indices[vindex + 0] + 4, inormal.y);
    Normals.InterlockedAdd(normalStride * Indices[vindex + 0] + 8, inormal.z);

    Normals.InterlockedAdd(normalStride * Indices[vindex + 1] + 0, inormal.x);
    Normals.InterlockedAdd(normalStride * Indices[vindex + 1] + 4, inormal.y);
    Normals.InterlockedAdd(normalStride * Indices[vindex + 1] + 8, inormal.z);

    Normals.InterlockedAdd(normalStride * Indices[vindex + 2] + 0, inormal.x);
    Normals.InterlockedAdd(normalStride * Indices[vindex + 2] + 4, inormal.y);
    Normals.InterlockedAdd(normalStride * Indices[vindex + 2] + 8, inormal.z);
}
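
The carving step in ChangeSdfVoxels can be mirrored on the CPU to see what it does to the voxel values. This is only a sketch under assumptions: SdfCarve is a hypothetical name, the loop is assumed to cover 2 * radius + 1 voxels per axis (the dispatch size is not shown in the post), and larger values are assumed to mean "empty", which is inferred from the kernel's use of max().

```csharp
using System;

public static class SdfCarve
{
    // Raises SDF values around a sphere, mirroring the max() in ChangeSdfVoxels.
    // Assumption: larger values mean "empty", so max() can only remove material.
    public static void Carve(float[] sdf, int size, int cx, int cy, int cz, int radius)
    {
        for (int z = cz - radius; z <= cz + radius; z++)
        for (int y = cy - radius; y <= cy + radius; y++)
        for (int x = cx - radius; x <= cx + radius; x++)
        {
            // Skip voxels outside the grid.
            if (x < 0 || y < 0 || z < 0 || x >= size || y >= size || z >= size)
                continue;

            int index = z * size * size + y * size + x;
            double dx = x - cx, dy = y - cy, dz = z - cz;

            // 1 at the center, 0 on the sphere surface, negative outside.
            float value = 1.0f - (float)(Math.Sqrt(dx * dx + dy * dy + dz * dz) / radius);
            sdf[index] = Math.Max(value, sdf[index]);
        }
    }

    public static void Main()
    {
        const int size = 5;
        var sdf = new float[size * size * size];
        Array.Fill(sdf, -1.0f); // start with a solid block

        Carve(sdf, size, 2, 2, 2, 2);
        Console.WriteLine($"center={sdf[2 * size * size + 2 * size + 2]} edge={sdf[2 * size * size + 2 * size]}");
    }
}
```

Because the update uses max(), carving the same place twice or overlapping two spheres never fills material back in.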

Shader "Unlit/GpuNaiveSurfaceNets2"
{
    Properties
    {
    }

    CGINCLUDE
    #include "UnityCG.cginc"
    #include "Lighting.cginc"

    struct appdata
    {
        uint vertexId : SV_VertexID;
    };

    struct v2f
    {
        float4 posCS : SV_POSITION;
        float3 normalOS : NORMAL;
        float3 lightDirOS : TEXCOORD0;
    };

    StructuredBuffer<float3> Vertices;
    StructuredBuffer<int3> Normals;
    StructuredBuffer<uint> Indices;

    sampler2D _MainTex;
    float4 _MainTex_ST;
    ENDCG

    SubShader
    {
        Tags
        {
            "RenderType" = "Opaque"
            "LightMode" = "ForwardBase"
        }

        LOD 100

        Pass
        {
            CGPROGRAM
            #pragma multi_compile_fwdbase
            #pragma vertex vert
            #pragma fragment frag

            v2f vert (appdata v)
            {
                v2f o;
                float3 vertex = Vertices[Indices[v.vertexId]];
                float4 posOS = float4(vertex, 1.0);
                o.posCS = UnityObjectToClipPos(posOS);

#if 0
                // flat shading
                uint vindex = v.vertexId - (v.vertexId % 3);
                float3 vec0 = Vertices[Indices[vindex + 1]] - Vertices[Indices[vindex]];
                float3 vec1 = Vertices[Indices[vindex + 2]] - Vertices[Indices[vindex]];
                o.normalOS = normalize(cross(vec0, vec1));
#else
                // smooth shading: decode the quantized normals accumulated by GenerateNormals
                float QUANTIZE_FACTOR = 32768.0;
                int3 n = Normals[Indices[v.vertexId]];
                o.normalOS = normalize(float3(n.x / QUANTIZE_FACTOR, n.y / QUANTIZE_FACTOR, n.z / QUANTIZE_FACTOR));
#endif

                o.lightDirOS = ObjSpaceLightDir(posOS);

                return o;
            }

            fixed4 frag (v2f i) : SV_Target
            {
                float3 normalOS = normalize(i.normalOS);
                float3 lightDirOS = normalize(i.lightDirOS);

                // Half-Lambert style shading
                float NdotL = dot(normalOS, lightDirOS) * 0.5 + 0.5;

                fixed4 col = fixed4(1,0,0,1);
                col.rgb *= NdotL;

                return col;
            }
            ENDCG
        }
    }
}
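
The normal pass accumulates quantized face normals with integer atomic adds and the shader later decodes them by normalizing. The same idea can be sketched on the CPU, with Interlocked.Add standing in for the GPU's InterlockedAdd and Parallel.For standing in for one thread per triangle. QuantizedNormals and its layout (three ints per vertex) are assumptions made for this illustration, not the post's buffer layout.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class QuantizedNormals
{
    const float QuantizeFactor = 32768.0f;

    // Adds each triangle's face normal to its three vertices using integer
    // atomic adds. Returns three ints (x, y, z) per vertex.
    public static int[] Accumulate(float[][] vertices, int[] indices)
    {
        var sums = new int[vertices.Length * 3];
        Parallel.For(0, indices.Length / 3, tri =>
        {
            float[] a = vertices[indices[tri * 3]];
            float[] b = vertices[indices[tri * 3 + 1]];
            float[] c = vertices[indices[tri * 3 + 2]];
            float[] n = Normalize(Cross(Sub(b, a), Sub(c, a)));

            for (int corner = 0; corner < 3; corner++)
            {
                int v = indices[tri * 3 + corner];
                for (int axis = 0; axis < 3; axis++)
                    Interlocked.Add(ref sums[v * 3 + axis], (int)(n[axis] * QuantizeFactor));
            }
        });
        return sums;
    }

    // Converts one vertex's accumulated integers back to a unit normal.
    public static float[] Decode(int[] sums, int vertex)
    {
        return Normalize(new[]
        {
            sums[vertex * 3] / QuantizeFactor,
            sums[vertex * 3 + 1] / QuantizeFactor,
            sums[vertex * 3 + 2] / QuantizeFactor
        });
    }

    static float[] Sub(float[] p, float[] q) =>
        new[] { p[0] - q[0], p[1] - q[1], p[2] - q[2] };

    static float[] Cross(float[] p, float[] q) =>
        new[] { p[1] * q[2] - p[2] * q[1], p[2] * q[0] - p[0] * q[2], p[0] * q[1] - p[1] * q[0] };

    static float[] Normalize(float[] p)
    {
        float len = (float)Math.Sqrt(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]);
        return new[] { p[0] / len, p[1] / len, p[2] / len };
    }

    public static void Main()
    {
        var vertices = new[]
        {
            new[] { 0f, 0f, 0f }, new[] { 1f, 0f, 0f }, new[] { 0f, 1f, 0f }
        };
        int[] sums = Accumulate(vertices, new[] { 0, 1, 2 });
        Console.WriteLine(string.Join(", ", Decode(sums, 0)));
    }
}
```

Since the decode step normalizes, the number of triangles that contributed to a vertex does not matter; only the direction of the summed vector is used.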

References

SDFの視覚化(NaiveSurfaceNets) - テキトープログラム( ..)φメモ (the earlier post on visualizing an SDF with NaiveSurfaceNets)

Question - Calculating Normals of a Mesh in Compute Shader - Unity Forum

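Lots of Unity-chan

Environment

Unity 2022.2.14f1

Overview

I built this from my earlier AnimationInstancing-style code and a SpringBone implementation that uses a ComputeShader.

The approach is mostly the same as before, but the SpringBone now tests for collisions against spheres (using the values of the SpringColliders attached to the hair and skirt) so that those parts do not sink into the body.

Switching facial expressions is also supported; it is done with VAT (vertex animation textures).

Even on my modest PC (GTX 1660), more than 1,000 SD Unity-chan characters are displayed.

With plain AnimationInstancing, without expressions or swaying parts, I believe it reached a little under 2,000.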


How to Use Gizmos.DrawFrustum

Environment

Unity 2022.2.14f1

Overview

How to use the debug feature that draws a view frustum.

Code

private void OnDrawGizmos()
{
    Gizmos.color = Color.cyan;
    // Draw in the camera's local space; the aspect ratio is applied as an X scale.
    Gizmos.matrix = Matrix4x4.TRS(_camera.transform.position, _camera.transform.rotation, new Vector3(_camera.aspect, 1.0f, 1.0f));
    Gizmos.DrawFrustum(Vector3.zero, _camera.fieldOfView, _camera.farClipPlane, _camera.nearClipPlane, 1.0f);

    // Size of the clip plane at the given distance
    float height = _cameraDistance * Mathf.Tan(_camera.fieldOfView * 0.5f * Mathf.Deg2Rad) * 2.0f;
    Gizmos.DrawCube(Vector3.forward * _cameraDistance, new Vector3(height * _camera.aspect, height, 0.1f));

    // Recover the distance from the clip-plane height
    float distance = height * 0.5f / Mathf.Tan(_camera.fieldOfView * 0.5f * Mathf.Deg2Rad);
    Gizmos.DrawLine(Vector3.zero, Vector3.forward * distance);
}
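
The two formulas used in the gizmo code are inverses of each other: the frustum height at a distance d is 2 * d * tan(fov / 2), and the distance for a given height is height / 2 / tan(fov / 2). A small stand-alone sketch (FrustumSize is a hypothetical helper, and the field of view is the vertical one in degrees, as with Camera.fieldOfView) makes the relationship easy to check outside Unity.

```csharp
using System;

public static class FrustumSize
{
    // Height of the view frustum cross-section at the given distance.
    public static double HeightAtDistance(double distance, double fovYDegrees) =>
        2.0 * distance * Math.Tan(fovYDegrees * 0.5 * Math.PI / 180.0);

    // Distance at which the frustum cross-section has the given height.
    public static double DistanceForHeight(double height, double fovYDegrees) =>
        height * 0.5 / Math.Tan(fovYDegrees * 0.5 * Math.PI / 180.0);

    public static void Main()
    {
        double height = HeightAtDistance(5.0, 60.0);
        double width = height * (16.0 / 9.0); // width follows from the aspect ratio
        Console.WriteLine($"height={height} width={width} distance={DistanceForHeight(height, 60.0)}");
    }
}
```

The width at the same distance is the height multiplied by the aspect ratio, which matches the cube size passed to Gizmos.DrawCube above.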